{

"cells": [

{

"cell_type": "markdown",

"id": "0b45c495",

"metadata": {},

"source": [

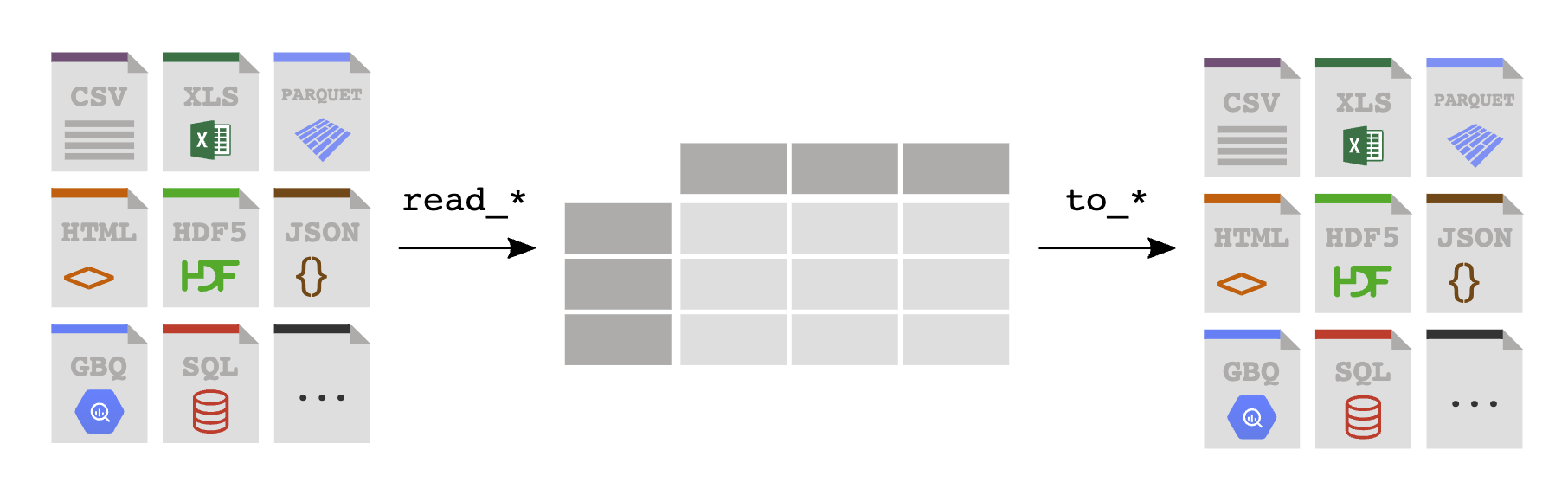

"# Lecture 03. Data Loading and Cleaning\n",

"\n",

"### Instructor: Luping Yu\n",

"\n",

"### Mar 12, 2024\n",

"\n",

"***\n",

"\n",

"Accessing data is a necessary first step for using most of the tools in this course. We are going to be focused on data input and output using pandas.\n",

"\n",

"***\n",

"## Reading and writing data in text format\n",

"\n",

"pandas features a number of functions for reading **tabular data** as a DataFrame object.\n",

"\n",

"\n",

"\n",

"\n",

"The following table summarizes some of them, though read_csv is likely the ones you'll use the most.\n",

"\n",

"|Function | Description |\n",

"|:- | :- | \n",

"|**read_csv** | Load delimited data from a file, URL, or file-like object; use comma as default delimiter\n",

"|**read_excel** | Read tabular data from an Excel XLS or XLSX file\n",

"|**read_stata** | Read a dataset from Stata file format\n",

"|**read_sas** | Read a SAS dataset stored in one of the SAS system's custom storage formats\n",

"|read_html | Read all tables found in the given HTML document\n",

"|read_json | Read data from a JSON (JavaScript Object Notation) string representation\n",

"|read_pickle | Read an arbitrary object stored in Python pickle format\n",

"|read_sql | Read the results of a SQL query (using SQLAlchemy) as a pandas DataFrame\n",

"\n",

"***\n",

"\n",

"### Reading and Writing .csv (comma-separated values)\n",

"\n",

".csv is a delimited text file that uses a **comma** to separate values. A .csv file typically stores **tabular data** (numbers and text) in **plain text**.\n",

"\n",

"Let‘s start with a small .csv text file: ex1.csv"

]

},

{

"cell_type": "code",

"execution_count": 1,

"id": "6f0d159d",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" a | \n",

" b | \n",

" c | \n",

" d | \n",

" message | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1 | \n",

" 2 | \n",

" 3 | \n",

" 4 | \n",

" hello | \n",

"

\n",

" \n",

" | 1 | \n",

" 5 | \n",

" 6 | \n",

" 7 | \n",

" 8 | \n",

" world | \n",

"

\n",

" \n",

" | 2 | \n",

" 9 | \n",

" 10 | \n",

" 11 | \n",

" 12 | \n",

" foo | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" a | \n",

" b | \n",

" c | \n",

" d | \n",

" message | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1 | \n",

" 2 | \n",

" 3 | \n",

" 4 | \n",

" hello | \n",

"

\n",

" \n",

" | 1 | \n",

" 5 | \n",

" 6 | \n",

" 7 | \n",

" 8 | \n",

" world | \n",

"

\n",

" \n",

" | 2 | \n",

" 9 | \n",

" 10 | \n",

" 11 | \n",

" 12 | \n",

" foo | \n",

"

\n",

" \n",

"

\n",

"

pandas.read_csv() perform type inference. That means you don't necessarily have to specify which columns are numeric, integer, boolean, or string:"

]

},

{

"cell_type": "code",

"execution_count": 3,

"id": "ade494cd",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"a int64\n",

"b int64\n",

"c int64\n",

"d int64\n",

"message object\n",

"dtype: object"

]

},

"execution_count": 3,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"df = pd.read_csv('examples/ex1.csv')\n",

"\n",

"df.dtypes"

]

},

{

"cell_type": "markdown",

"id": "110442cf",

"metadata": {},

"source": [

"A file will not always have a **header row**. Consider this file: ex2.csv"

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "99d8859b-28c2-4977-97c0-57250bde0449",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" 1 | \n",

" 2 | \n",

" 3 | \n",

" 4 | \n",

" hello | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 5 | \n",

" 6 | \n",

" 7 | \n",

" 8 | \n",

" world | \n",

"

\n",

" \n",

" | 1 | \n",

" 9 | \n",

" 10 | \n",

" 11 | \n",

" 12 | \n",

" foo | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" a | \n",

" b | \n",

" c | \n",

" d | \n",

" message | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1 | \n",

" 2 | \n",

" 3 | \n",

" 4 | \n",

" hello | \n",

"

\n",

" \n",

" | 1 | \n",

" 5 | \n",

" 6 | \n",

" 7 | \n",

" 8 | \n",

" world | \n",

"

\n",

" \n",

" | 2 | \n",

" 9 | \n",

" 10 | \n",

" 11 | \n",

" 12 | \n",

" foo | \n",

"

\n",

" \n",

"

\n",

"

DataFrame. You can use the index_col argument:"

]

},

{

"cell_type": "code",

"execution_count": 6,

"id": "623cae19",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" a | \n",

" b | \n",

" c | \n",

" d | \n",

"

\n",

" \n",

" | message | \n",

" | \n",

" | \n",

" | \n",

" | \n",

"

\n",

" \n",

" \n",

" \n",

" | hello | \n",

" 1 | \n",

" 2 | \n",

" 3 | \n",

" 4 | \n",

"

\n",

" \n",

" | world | \n",

" 5 | \n",

" 6 | \n",

" 7 | \n",

" 8 | \n",

"

\n",

" \n",

" | foo | \n",

" 9 | \n",

" 10 | \n",

" 11 | \n",

" 12 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" something | \n",

" a | \n",

" b | \n",

" c | \n",

" d | \n",

" message | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" one | \n",

" 1 | \n",

" 2 | \n",

" 3.0 | \n",

" 4 | \n",

" NaN | \n",

"

\n",

" \n",

" | 1 | \n",

" two | \n",

" 5 | \n",

" 6 | \n",

" NaN | \n",

" 8 | \n",

" world | \n",

"

\n",

" \n",

" | 2 | \n",

" three | \n",

" 9 | \n",

" 10 | \n",

" 11.0 | \n",

" 12 | \n",

" foo | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" something | \n",

" a | \n",

" b | \n",

" c | \n",

" d | \n",

" message | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" True | \n",

" True | \n",

" True | \n",

" True | \n",

" True | \n",

" False | \n",

"

\n",

" \n",

" | 1 | \n",

" True | \n",

" True | \n",

" True | \n",

" False | \n",

" True | \n",

" True | \n",

"

\n",

" \n",

" | 2 | \n",

" True | \n",

" True | \n",

" True | \n",

" True | \n",

" True | \n",

" True | \n",

"

\n",

" \n",

"

\n",

"

to_csv() method, we can write the data out to a comma-separated file:"

]

},

{

"cell_type": "code",

"execution_count": 9,

"id": "de1d01b3",

"metadata": {},

"outputs": [],

"source": [

"df.to_csv('examples/out1.csv')"

]

},

{

"cell_type": "markdown",

"id": "92dd432a",

"metadata": {},

"source": [

"With no other options specified, both the row and column labels are written. Both of these can be disabled:"

]

},

{

"cell_type": "code",

"execution_count": 10,

"id": "2cb0e34f",

"metadata": {},

"outputs": [],

"source": [

"df.to_csv('examples/out2.csv', index=False)"

]

},

{

"cell_type": "markdown",

"id": "10ee9c54",

"metadata": {},

"source": [

"***\n",

"### Parameters of data loading functions\n",

"\n",

"Because of how messy data in the real world can be, data loading functions (especially read_csv()) have grown very complex in their options over time. The **online pandas documentation** has many examples about how each of them works.\n",

"\n",

"API reference (pandas documentation) of read_csv(): https://pandas.pydata.org/docs/reference/api/pandas.read_csv.html\n",

"\n",

"\n",

"***\n",

"\n",

"### Reading Microsoft excel files\n",

"\n",

"pandas also supports reading tabular data stored in Excel 2003 (and higher) files using pandas.read_excel() function:\n",

"\n",

"Internally these tools use the add-on packages **xlrd** and **openpyxl** to read XLS and XLSX files, respectively. You may need to install these manually with pip."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "60202b36",

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"df = pd.read_excel('examples/ex1.xlsx', 'Sheet1')\n",

"\n",

"df"

]

},

{

"cell_type": "markdown",

"id": "e4dbc40f",

"metadata": {},

"source": [

"To write pandas data to Excel format, you can pass a file path to to_excel():"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "d04c3dbb",

"metadata": {},

"outputs": [],

"source": [

"df.to_excel('examples/out1.xlsx')"

]

},

{

"cell_type": "markdown",

"id": "65c236ad",

"metadata": {},

"source": [

"***\n",

"\n",

"## Data cleaning and preparation\n",

"\n",

"During the course of doing data analysis and modeling, a significant amount of time is spent on data preparation: **loading**, **cleaning**, **transforming**, and **rearranging**. Such tasks are often reported to take up 80% or more of an analyst's time.\n",

"\n",

"Sometimes the way that data is stored in files or databases is not in the right format for a particular task. Fortunately, pandas provides you with a high-level, flexible, and fast set of tools to enable you to manipulate data into the right form.\n",

"\n",

"***\n",

"### Handling Missing Data\n",

"\n",

"Missing data (**NA**, which stands for **not available**) occurs commonly in many data analysis applications. For numeric data, pandas uses the floating-point value **NaN** (not a number) to represent missing data.\n",

"\n",

"With DataFrame objects, you may want to drop rows or columns that are all NA or only those containing any NAs.\n",

"\n",

"dropna() by default drops any row containing a missing value:"

]

},

{

"cell_type": "code",

"execution_count": 13,

"id": "b1526cc1",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" 0 | \n",

" 1 | \n",

" 2 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1.0 | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 1.0 | \n",

" NaN | \n",

" NaN | \n",

"

\n",

" \n",

" | 2 | \n",

" NaN | \n",

" NaN | \n",

" NaN | \n",

"

\n",

" \n",

" | 3 | \n",

" NaN | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" 0 | \n",

" 1 | \n",

" 2 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1.0 | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

"

\n",

"

how='all' will only drop rows that are all NA:"

]

},

{

"cell_type": "code",

"execution_count": 15,

"id": "8e5719cb",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" 0 | \n",

" 1 | \n",

" 2 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1.0 | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 1.0 | \n",

" NaN | \n",

" NaN | \n",

"

\n",

" \n",

" | 3 | \n",

" NaN | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

"

\n",

"

axis=1:"

]

},

{

"cell_type": "code",

"execution_count": 16,

"id": "c943629f",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" 0 | \n",

" 1 | \n",

" 2 | \n",

" 3 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1.0 | \n",

" 6.5 | \n",

" 3.0 | \n",

" None | \n",

"

\n",

" \n",

" | 1 | \n",

" 1.0 | \n",

" NaN | \n",

" NaN | \n",

" None | \n",

"

\n",

" \n",

" | 2 | \n",

" NaN | \n",

" NaN | \n",

" NaN | \n",

" None | \n",

"

\n",

" \n",

" | 3 | \n",

" NaN | \n",

" 6.5 | \n",

" 3.0 | \n",

" None | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" 0 | \n",

" 1 | \n",

" 2 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 1.0 | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 1.0 | \n",

" NaN | \n",

" NaN | \n",

"

\n",

" \n",

" | 2 | \n",

" NaN | \n",

" NaN | \n",

" NaN | \n",

"

\n",

" \n",

" | 3 | \n",

" NaN | \n",

" 6.5 | \n",

" 3.0 | \n",

"

\n",

" \n",

"

\n",

"

fillna method is the function to use. \n",

"\n",

"Calling fillna with a constant replaces missing values with that value:"

]

},

{

"cell_type": "code",

"execution_count": 18,

"id": "3edb6bc9",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" class participation | \n",

" homework | \n",

" midterm | \n",

" final | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 10.0 | \n",

" 30.0 | \n",

" 20.0 | \n",

" 40.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 15.0 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 2 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.0 | \n",

" NaN | \n",

"

\n",

" \n",

" | 3 | \n",

" NaN | \n",

" NaN | \n",

" NaN | \n",

" NaN | \n",

"

\n",

" \n",

" | 4 | \n",

" NaN | \n",

" NaN | \n",

" 10.0 | \n",

" 30.0 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" class participation | \n",

" homework | \n",

" midterm | \n",

" final | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 10.0 | \n",

" 30.0 | \n",

" 20.0 | \n",

" 40.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 15.0 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 2 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.0 | \n",

" 5.0 | \n",

"

\n",

" \n",

" | 3 | \n",

" 5.0 | \n",

" 5.0 | \n",

" 5.0 | \n",

" 5.0 | \n",

"

\n",

" \n",

" | 4 | \n",

" 5.0 | \n",

" 5.0 | \n",

" 10.0 | \n",

" 30.0 | \n",

"

\n",

" \n",

"

\n",

"

fillna() with a dict, you can use a different fill value for each column:"

]

},

{

"cell_type": "code",

"execution_count": 20,

"id": "f516242f",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" class participation | \n",

" homework | \n",

" midterm | \n",

" final | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 10.0 | \n",

" 30.0 | \n",

" 20.0 | \n",

" 40.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 15.0 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 2 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.0 | \n",

" 30.0 | \n",

"

\n",

" \n",

" | 3 | \n",

" 5.0 | \n",

" NaN | \n",

" NaN | \n",

" 30.0 | \n",

"

\n",

" \n",

" | 4 | \n",

" 5.0 | \n",

" NaN | \n",

" 10.0 | \n",

" 30.0 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" class participation | \n",

" homework | \n",

" midterm | \n",

" final | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 10.0 | \n",

" 30.0 | \n",

" 20.0 | \n",

" 40.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 15.0 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 2 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.0 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 3 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.0 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 4 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.0 | \n",

" 30.0 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" class participation | \n",

" homework | \n",

" midterm | \n",

" final | \n",

"

\n",

" \n",

" \n",

" \n",

" | count | \n",

" 3.0 | \n",

" 3.0 | \n",

" 4.000000 | \n",

" 3.0 | \n",

"

\n",

" \n",

" | mean | \n",

" 8.0 | \n",

" 25.0 | \n",

" 13.750000 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | std | \n",

" 2.0 | \n",

" 5.0 | \n",

" 4.787136 | \n",

" 5.0 | \n",

"

\n",

" \n",

" | min | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.000000 | \n",

" 30.0 | \n",

"

\n",

" \n",

" | 25% | \n",

" 7.0 | \n",

" 22.5 | \n",

" 10.000000 | \n",

" 32.5 | \n",

"

\n",

" \n",

" | 50% | \n",

" 8.0 | \n",

" 25.0 | \n",

" 12.500000 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 75% | \n",

" 9.0 | \n",

" 27.5 | \n",

" 16.250000 | \n",

" 37.5 | \n",

"

\n",

" \n",

" | max | \n",

" 10.0 | \n",

" 30.0 | \n",

" 20.000000 | \n",

" 40.0 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" class participation | \n",

" homework | \n",

" midterm | \n",

" final | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" 10.0 | \n",

" 30.0 | \n",

" 20.00 | \n",

" 40.0 | \n",

"

\n",

" \n",

" | 1 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 15.00 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 2 | \n",

" 6.0 | \n",

" 20.0 | \n",

" 10.00 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 3 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 13.75 | \n",

" 35.0 | \n",

"

\n",

" \n",

" | 4 | \n",

" 8.0 | \n",

" 25.0 | \n",

" 10.00 | \n",

" 30.0 | \n",

"

\n",

" \n",

"

\n",

"

DataFrame for any number of reasons. Here is an example:"

]

},

{

"cell_type": "code",

"execution_count": 24,

"id": "bdfbabd1",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" k1 | \n",

" k2 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" one | \n",

" 1 | \n",

"

\n",

" \n",

" | 1 | \n",

" two | \n",

" 1 | \n",

"

\n",

" \n",

" | 2 | \n",

" one | \n",

" 2 | \n",

"

\n",

" \n",

" | 3 | \n",

" two | \n",

" 3 | \n",

"

\n",

" \n",

" | 4 | \n",

" one | \n",

" 3 | \n",

"

\n",

" \n",

" | 5 | \n",

" two | \n",

" 4 | \n",

"

\n",

" \n",

" | 6 | \n",

" two | \n",

" 4 | \n",

"

\n",

" \n",

"

\n",

"

DataFrame method duplicated() returns a boolean Series indicating whether each row is a duplicate (has been observed in a previous row) or not:"

]

},

{

"cell_type": "code",

"execution_count": 25,

"id": "c24a3e2b",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"0 False\n",

"1 False\n",

"2 False\n",

"3 False\n",

"4 False\n",

"5 False\n",

"6 True\n",

"dtype: bool"

]

},

"execution_count": 25,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"df.duplicated()"

]

},

{

"cell_type": "markdown",

"id": "101da634",

"metadata": {},

"source": [

"Relatedly, drop_duplicates() returns a DataFrame where the duplicated array is False:"

]

},

{

"cell_type": "code",

"execution_count": 26,

"id": "6ebf0302",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" k1 | \n",

" k2 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" one | \n",

" 1 | \n",

"

\n",

" \n",

" | 1 | \n",

" two | \n",

" 1 | \n",

"

\n",

" \n",

" | 2 | \n",

" one | \n",

" 2 | \n",

"

\n",

" \n",

" | 3 | \n",

" two | \n",

" 3 | \n",

"

\n",

" \n",

" | 4 | \n",

" one | \n",

" 3 | \n",

"

\n",

" \n",

" | 5 | \n",

" two | \n",

" 4 | \n",

"

\n",

" \n",

"

\n",

"

drop_duplicates() considers all of the columns; alternatively, you can specify any **subset** of them to detect duplicates.\n",

"\n",

"Suppose we had an additional column of values and wanted to filter duplicates only based on the 'k1' column:"

]

},

{

"cell_type": "code",

"execution_count": 27,

"id": "620473a2",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" k1 | \n",

" k2 | \n",

" k3 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" one | \n",

" 1 | \n",

" 0 | \n",

"

\n",

" \n",

" | 1 | \n",

" two | \n",

" 1 | \n",

" 1 | \n",

"

\n",

" \n",

" | 2 | \n",

" one | \n",

" 2 | \n",

" 2 | \n",

"

\n",

" \n",

" | 3 | \n",

" two | \n",

" 3 | \n",

" 3 | \n",

"

\n",

" \n",

" | 4 | \n",

" one | \n",

" 3 | \n",

" 4 | \n",

"

\n",

" \n",

" | 5 | \n",

" two | \n",

" 4 | \n",

" 5 | \n",

"

\n",

" \n",

" | 6 | \n",

" two | \n",

" 4 | \n",

" 6 | \n",

"

\n",

" \n",

"

\n",

"

\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" k1 | \n",

" k2 | \n",

" k3 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" one | \n",

" 1 | \n",

" 0 | \n",

"

\n",

" \n",

" | 1 | \n",

" two | \n",

" 1 | \n",

" 1 | \n",

"

\n",

" \n",

"

\n",

"

duplicated() and drop_duplicates() by default keep the **first** observed value combination. Passing keep='last' will return the last one:"

]

},

{

"cell_type": "code",

"execution_count": 29,

"id": "08c442c0",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"\n",

"\n",

"

\n",

" \n",

" \n",

" | \n",

" k1 | \n",

" k2 | \n",

" k3 | \n",

"

\n",

" \n",

" \n",

" \n",

" | 0 | \n",

" one | \n",

" 1 | \n",

" 0 | \n",

"

\n",

" \n",

" | 1 | \n",

" two | \n",

" 1 | \n",

" 1 | \n",

"

\n",

" \n",

" | 2 | \n",

" one | \n",

" 2 | \n",

" 2 | \n",

"

\n",

" \n",

" | 3 | \n",

" two | \n",

" 3 | \n",

" 3 | \n",

"

\n",

" \n",

" | 4 | \n",

" one | \n",

" 3 | \n",

" 4 | \n",

"

\n",

" \n",

" | 6 | \n",

" two | \n",

" 4 | \n",

" 6 | \n",

"

\n",

" \n",

"

\n",

"

Series has array-oriented methods for string operations that skip NA values. These are accessed through Series's str attribute.\n",

"\n",

"For example, we could check whether each email address has 'xmu.edu' in it with str.contains:"

]

},

{

"cell_type": "code",

"execution_count": 31,

"id": "2d0ec00d",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"Dave False\n",

"Jack True\n",

"Steve False\n",

"Rose True\n",

"Tony None\n",

"dtype: object"

]

},

"execution_count": 31,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"df.str.contains('xmu.edu')"

]

},

{

"cell_type": "markdown",

"id": "71c9b6cd",

"metadata": {},

"source": [

"You can similarly **slice** strings using this syntax:"

]

},

{

"cell_type": "code",

"execution_count": 32,

"id": "3fbc0d6d",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"Dave dave@\n",

"Jack jack@\n",

"Steve steve\n",

"Rose rose@\n",

"Tony None\n",

"dtype: object"

]

},

"execution_count": 32,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"df.str[:5]"

]

},

{

"cell_type": "code",

"execution_count": 33,

"id": "288f88a1",

"metadata": {

"scrolled": true

},

"outputs": [

{

"data": {

"text/plain": [

"Dave [dave, google.com]\n",

"Jack [jack, xmu.edu.cn]\n",

"Steve [steve, gmail.com]\n",

"Rose [rose, xmu.edu.cn]\n",

"Tony None\n",

"dtype: object"

]

},

"execution_count": 33,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"df.str.split('@')"

]

},

{

"cell_type": "code",

"execution_count": 34,

"id": "a6c9f11b",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"Dave dave\n",

"Jack jack\n",

"Steve steve\n",

"Rose rose\n",

"Tony None\n",

"dtype: object"

]

},

"execution_count": 34,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"df.str.split('@').str.get(0)"

]

},

{

"cell_type": "markdown",

"id": "95eceec9",

"metadata": {},

"source": [

"Partial listing of vectorized string methods.\n",

"\n",

"|Method|Description|\n",

"|:- | :- | \n",

"|cat|Concatenate strings element-wise with optional delimiter\n",

"|contains|Return boolean array if each string contains pattern/regex\n",

"|count|Count occurrences of pattern\n",

"|extract|Use a regular expression with groups to extract one or more strings from a Series of strings\n",

"|endswith|Equivalent to x.endswith(pattern) for each element\n",

"|startswith|Equivalent to x.startswith(pattern) for each element\n",

"|findall|Compute list of all occurrences of pattern/regex for each string\n",

"|get|Index into each element (retrieve i-th element)\n",

"|join|Join strings in each element of the Series with passed separator\n",

"|len|Compute length of each string\n",

"|lower,upper|Convert cases;equivalent to x.lower() or x.upper() for each element\n",

"|match|Use re.match with the passed regular expression on each element\n",

"|replace|Replace occurrences of pattern/regex with some other string\n",

"|slice|Slice each string in the Series\n",

"|split|Split strings on delimiter or regular expression\n",

"|strip|Trim whitespace from both sides, including newlines"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.11.4"

}

},

"nbformat": 4,

"nbformat_minor": 5

}